Codemod AI: Adopting Sonnet 3.5 as the Underlying Model

LLMs for software development improve quickly, so an AI tool whose underlying model can be swapped has a real advantage: it can adopt the latest advances as soon as they appear. We designed Codemod AI with this principle in mind, which let us switch its underlying model from GPT-4o to Sonnet 3.5 quickly. This article presents the evaluation findings for that transition.

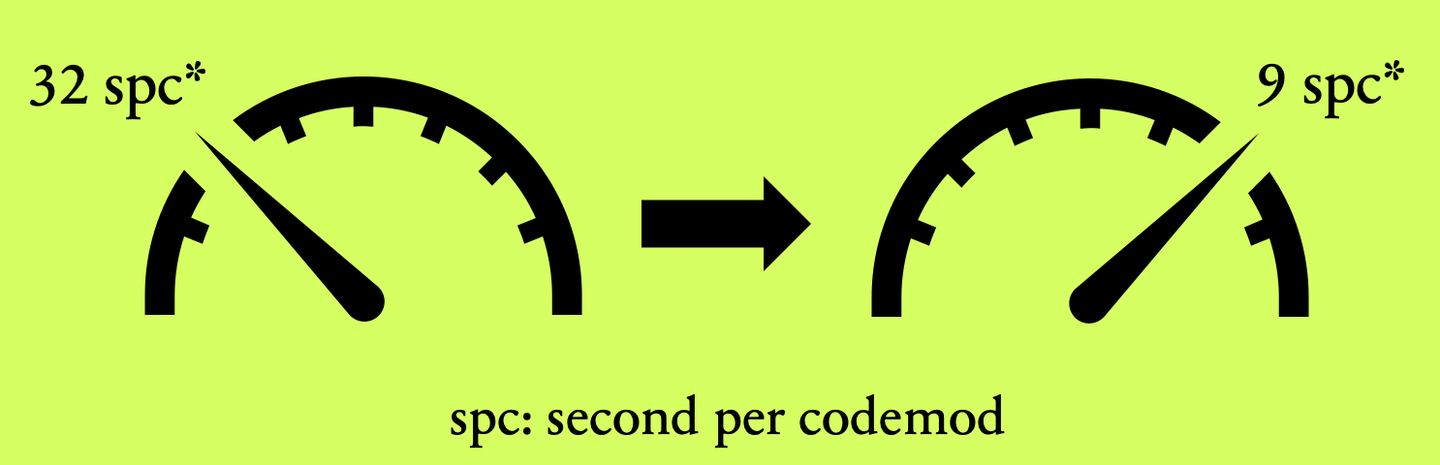

🔥 The upgrade from GPT-4o to Sonnet 3.5 improved accuracy from 75% to 77% and, more importantly, made automatic codemod generation from before/after code examples roughly 3.5x faster.

Experimental Results Overview

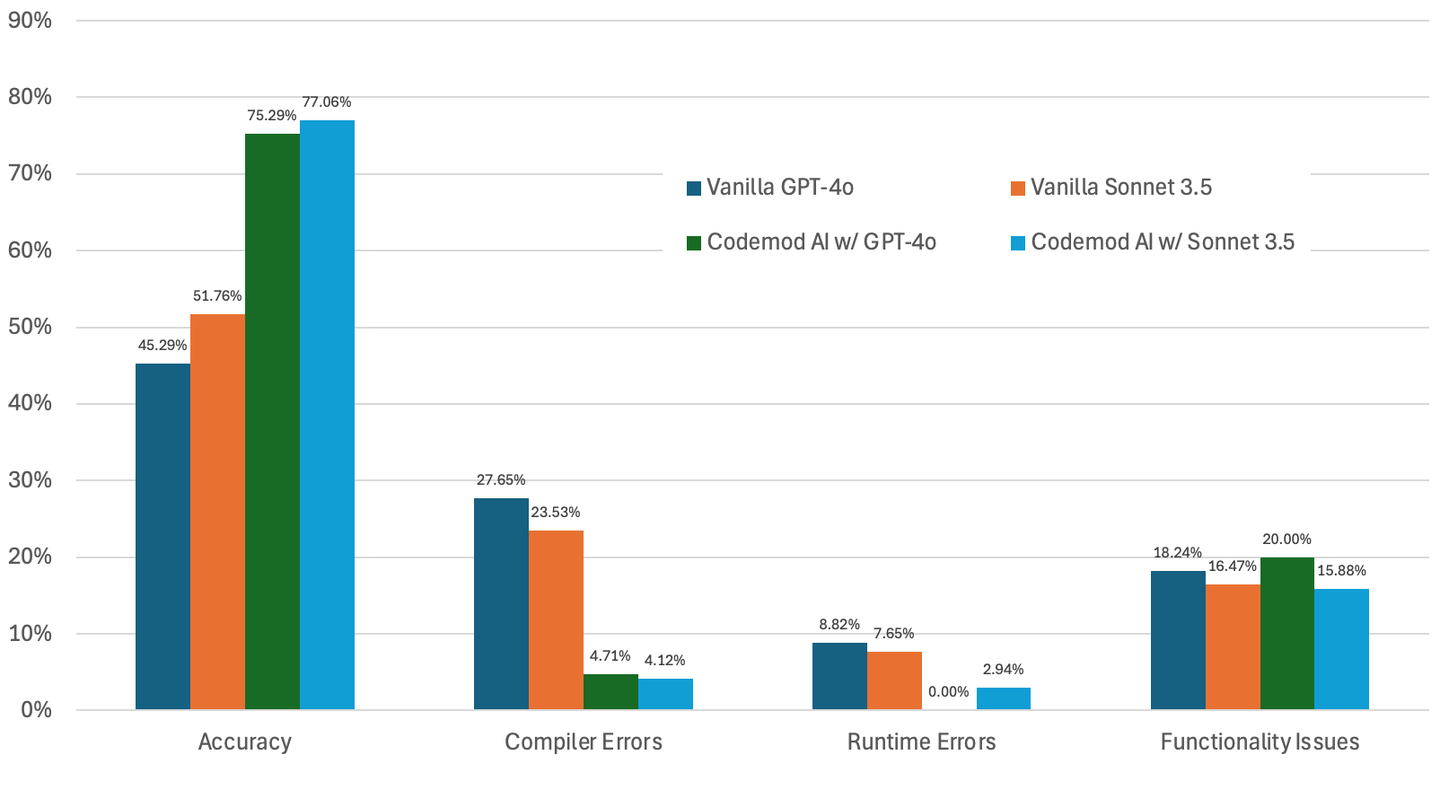

We ran a study on 170 pairs of before and after code snippets collected from Codemod’s public registry. We compared four setups: vanilla Sonnet 3.5, vanilla GPT-4o, and Codemod AI running on top of each of these LLMs with three refinement iterations. All results are for jscodeshift codemod generation. The sections below cover accuracy, error reduction, and efficiency.
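To make "three refinement iterations" concrete, here is a minimal sketch of a generate-check-refine loop. It is an illustration under stated assumptions, not Codemod AI's actual implementation: `refine_codemod`, `generate`, and `check` are hypothetical names, and this article does not describe the real prompts or feedback format.

```python
from typing import Callable, Optional, Tuple

def refine_codemod(
    generate: Callable[[str, str, Optional[str]], str],
    check: Callable[[str, str, str], Optional[str]],
    before: str,
    after: str,
    max_refinements: int = 3,
) -> Tuple[str, bool]:
    """Hypothetical loop: one initial attempt, then up to max_refinements retries.

    generate(before, after, feedback) returns codemod source code.
    check(codemod, before, after) returns None on success, else an error message.
    """
    codemod = generate(before, after, None)  # initial attempt, no feedback yet
    for _ in range(max_refinements):
        error = check(codemod, before, after)
        if error is None:
            return codemod, True
        # Assumed feedback channel: pass the error text back to the model.
        codemod = generate(before, after, error)
    # Check the result of the last refinement as well.
    return codemod, check(codemod, before, after) is None
```

With `max_refinements=0` the loop reduces to a single attempt with no feedback, which corresponds to the "vanilla" setups in the comparison.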

Accuracy: Vanilla Sonnet 3.5 reached 51.76% accuracy, already ahead of vanilla GPT-4o at 45.29%. With three refinement iterations, Codemod AI raised these figures to 77.06% for Sonnet 3.5 and 75.29% for GPT-4o.
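As a quick sanity check, the reported percentages can be converted back into whole-number counts out of the 170 pairs. The counts below are derived from the percentages, not taken from the study's raw data, and `implied_correct` is a helper name introduced here for illustration.

```python
TOTAL_PAIRS = 170  # before/after pairs in the study

# Accuracy figures as reported in this article (percent).
reported_accuracy = {
    "vanilla GPT-4o": 45.29,
    "vanilla Sonnet 3.5": 51.76,
    "Codemod AI + GPT-4o": 75.29,
    "Codemod AI + Sonnet 3.5": 77.06,
}

def implied_correct(percent: float, total: int = TOTAL_PAIRS) -> int:
    """Nearest whole number of correct codemods for a reported percentage."""
    return round(percent / 100 * total)

implied = {name: implied_correct(p) for name, p in reported_accuracy.items()}

for name, count in implied.items():
    # Recompute the percentage from the count to confirm it matches the report.
    print(f"{name}: {count}/{TOTAL_PAIRS} = {count / TOTAL_PAIRS:.2%}")
```

Under this reading, moving from GPT-4o to Sonnet 3.5 inside Codemod AI corresponds to three additional correct codemods (131 versus 128).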

Error Reduction: Without Codemod AI’s refinement iterations, vanilla Sonnet 3.5 generated 7 fewer codemods with compiler errors than vanilla GPT-4o. For runtime errors and functionality issues, the two models behaved roughly the same, with Sonnet 3.5 slightly ahead. With Codemod AI, compiler and runtime errors dropped significantly. Functionality errors, which occur when there is no compiler or runtime error but the actual output does not match the expected output, remained relatively stable, with a slight improvement from 16.47% to 15.88% when Sonnet 3.5 was used as the underlying model in Codemod AI.
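The three error categories above can be expressed as a small decision procedure. This is a sketch of the taxonomy only: `Outcome` and `classify` are hypothetical names, and comparing outputs after stripping surrounding whitespace is an assumption, since the article does not say how outputs were compared.

```python
from enum import Enum
from typing import Optional

class Outcome(Enum):
    CORRECT = "correct"
    COMPILER_ERROR = "compiler error"
    RUNTIME_ERROR = "runtime error"
    FUNCTIONALITY_ERROR = "functionality error"

def classify(compiled: bool, actual_output: Optional[str], expected_output: str) -> Outcome:
    """Classify one generated codemod.

    actual_output is None when the codemod raised an error while running.
    """
    if not compiled:
        return Outcome.COMPILER_ERROR
    if actual_output is None:
        return Outcome.RUNTIME_ERROR
    # Assumption: ignore leading/trailing whitespace when comparing outputs.
    if actual_output.strip() != expected_output.strip():
        return Outcome.FUNCTIONALITY_ERROR
    return Outcome.CORRECT
```

The ordering matters: a codemod is only counted as a functionality error once it has compiled and run without raising.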

Efficiency: Codemod AI was clearly faster with Sonnet 3.5, taking roughly 26 minutes to generate jscodeshift codemods for the 170 pairs of before and after code examples. With GPT-4o, the same task took about 90 minutes. Put differently, the average time per codemod generation dropped from around 32 seconds to around 9 seconds.
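The per-codemod averages and the overall speedup follow directly from the total run times. The short calculation below reproduces them; the variable names are introduced here for illustration, and the inputs are the rounded figures quoted in this article.

```python
TOTAL_PAIRS = 170  # before/after pairs in the study

# Approximate total run time of Codemod AI on each underlying model (minutes).
total_minutes = {"GPT-4o": 90, "Sonnet 3.5": 26}

# Average seconds spent per generated codemod.
seconds_per_codemod = {
    model: minutes * 60 / TOTAL_PAIRS for model, minutes in total_minutes.items()
}

# How many times faster the Sonnet 3.5 run was.
speedup = total_minutes["GPT-4o"] / total_minutes["Sonnet 3.5"]

for model, seconds in seconds_per_codemod.items():
    print(f"{model}: {seconds:.1f} s per codemod")
print(f"speedup: {speedup:.2f}x")
```

This gives about 31.8 seconds per codemod with GPT-4o, about 9.2 seconds with Sonnet 3.5, and a speedup of about 3.46x, which rounds to the 3.5x headline figure.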

Moving Forward

Codemod AI, particularly with Sonnet 3.5, is a significant step forward in automatic codemod generation. The gains in accuracy, the reduction in errors, and the faster generation times show its potential to change how developers transform their codebases. Our two main goals going forward are developing more sophisticated reasoning methods to further reduce functionality issues in auto-generated codemods, and expanding support to more codemod engines and programming languages. Let us know if you would like to see a specific engine or programming language supported by Codemod AI.
